Connecting to all your Sitecore Docker Container services using GUI tools

Kamruz Jaman - Solution Architect

6 Oct 2023

When working on Sitecore projects, the use of Docker and containers has risen over the past few years. It has certainly gained a lot of traction, even if local installation is still very popular. If you are working on XM Cloud solutions, though, you will have no choice except to use containers for development. We have found them very useful for local development, especially when working across multiple projects at the same time, onboarding new team members and generally spinning up quick instances for R&D purposes.

As great as Docker and containers are, we are all creatures of comfort, and when developing locally, sooner or later you will need to debug an issue. Having spent the majority of our programming lives using local instances of everything, we reach for the tools we are most familiar with. But how do we use these tools with our containers?

I previously shared an article about Tooling to help debug your Sitecore Docker Containers; please also have a read for more debugging tips.

Remotely connecting to your container SQL Server instance

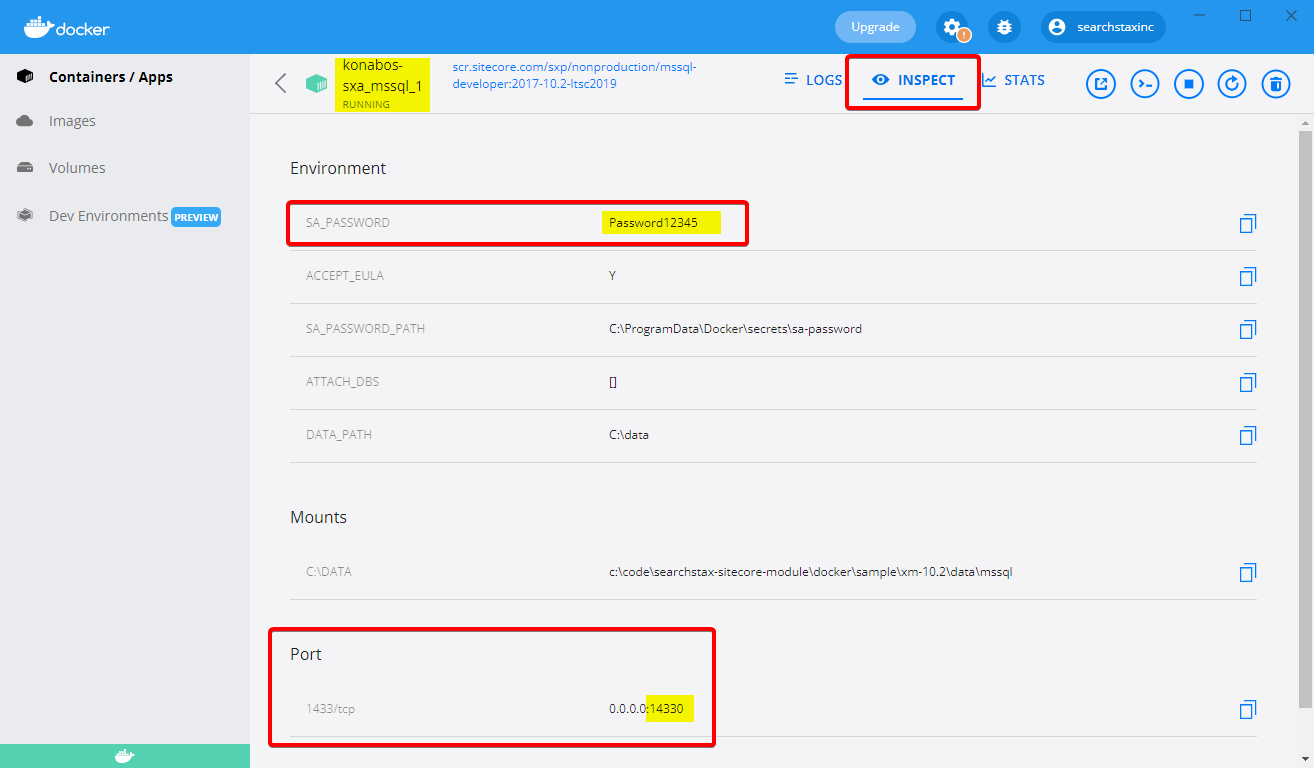

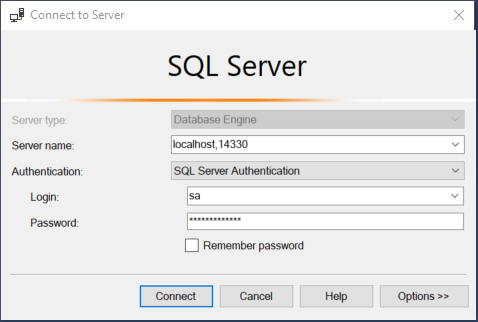

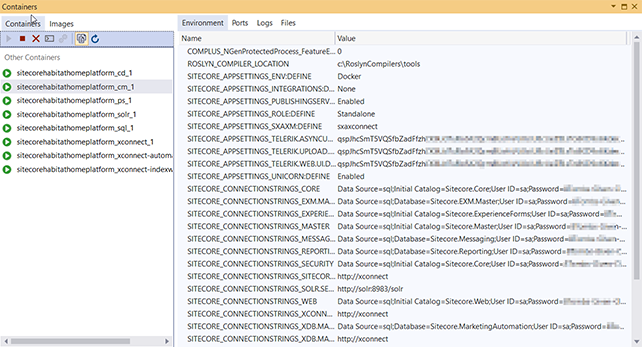

Connecting to your SQL Server in the container is surprisingly easy. You just need the correct port number and login/password, all of which are available from your .env and docker-compose files. You can also inspect the running containers, either the details of the SQL container itself or the connection string details in the CM container.
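If you would rather not dig through the files by hand, you can pull the credentials out with a script. Below is a minimal Python sketch. It assumes your .env file uses the SQL_SA_LOGIN and SQL_SA_PASSWORD variable names found in Sitecore's Docker example repositories, so check your own file because the names may differ. It only handles simple KEY=VALUE lines, with no quoting or variable interpolation.

```python
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines from a docker-compose style .env file."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments and anything that is not KEY=VALUE
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so passwords containing '=' survive
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def sql_credentials(env_path: str = ".env") -> tuple[str, str]:
    """Return (login, password) for the SQL Server container.

    Assumes the SQL_SA_LOGIN / SQL_SA_PASSWORD variable names (check your
    own .env file); the login falls back to 'sa' if the variable is missing.
    """
    env = parse_env(Path(env_path).read_text(encoding="utf-8"))
    return env.get("SQL_SA_LOGIN", "sa"), env["SQL_SA_PASSWORD"]
```

Call sql_credentials() from the folder that contains your .env file, or pass its path explicitly.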

When connecting to the instance using SQL Server Management Studio (SSMS), the server name should be in the format localhost,<port> (note that a comma, not a colon, separates the host from the port number). Log in using the sa account and the password you set in your .env file.
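The port to use is the host side of the port mapping on the SQL Server service in your docker-compose file. The small Python sketch below turns such a mapping into the server name SSMS expects. The mapping value 14330:1433 used in the usage line is only illustrative, so use whatever your compose file declares.

```python
def host_port(mapping: str) -> int:
    """Return the host port from a compose 'ports' entry.

    Handles the "HOST:CONTAINER" and "IP:HOST:CONTAINER" forms, with or
    without surrounding quotes or a /tcp suffix. Port ranges are not supported.
    """
    parts = mapping.strip().strip('"').split(":")
    if len(parts) < 2:
        raise ValueError(f"No host port in mapping {mapping!r}")
    return int(parts[-2])


def ssms_server_name(port: int, host: str = "localhost") -> str:
    """SQL Server clients separate host and port with a comma, not a colon."""
    return f"{host},{port}"
```

For example, print(ssms_server_name(host_port("14330:1433"))) prints localhost,14330, which is what goes into the SSMS "Server name" box.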

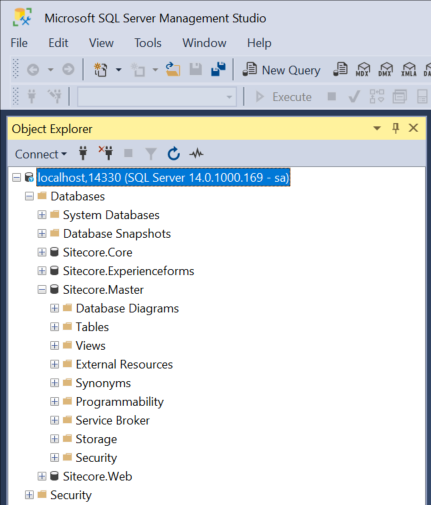

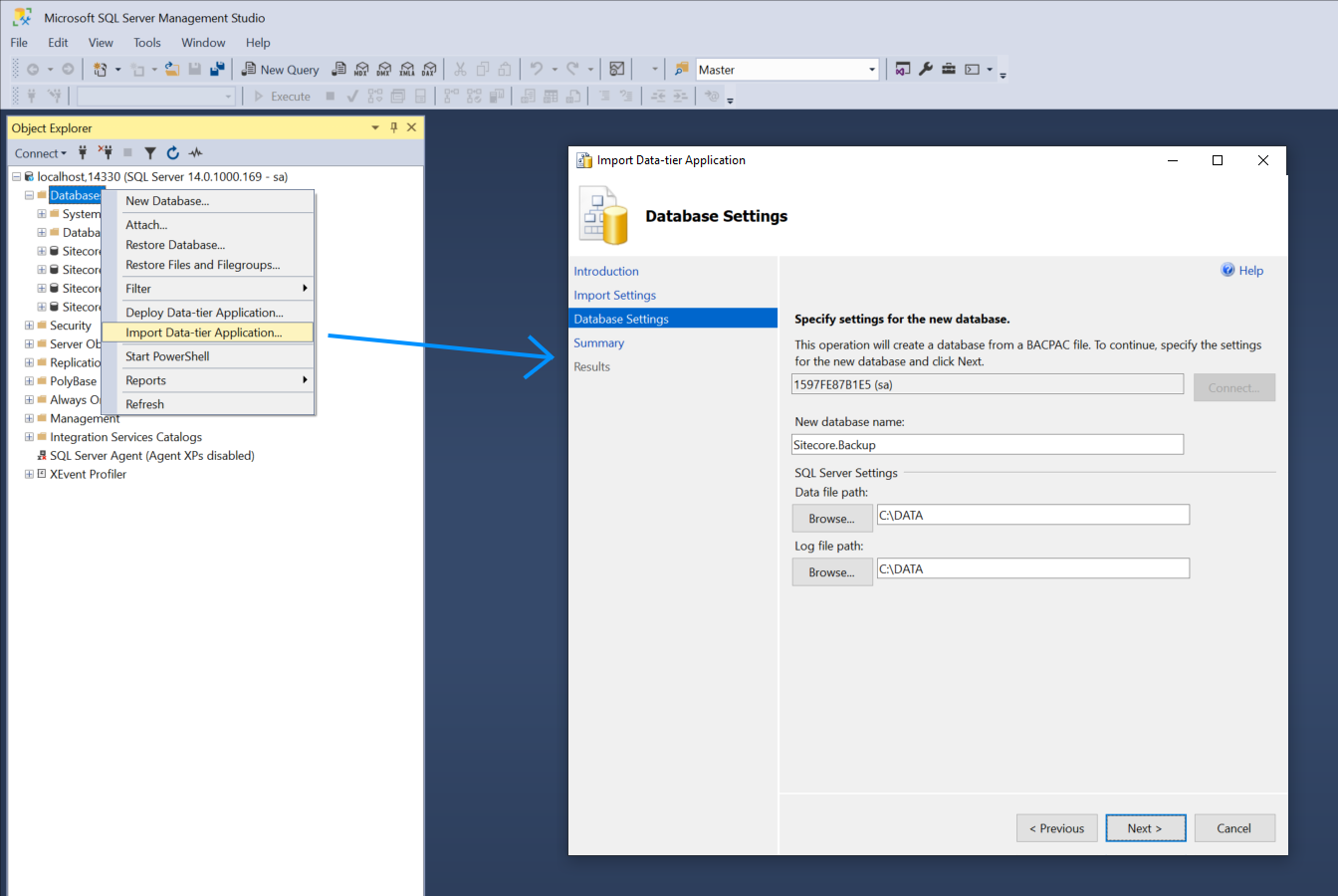

You should now have access to all the databases and be able to view the data in the tables or run whatever queries you need.

You can also restore database backups using SSMS. However, it's important to set the restore folder to C:\data, since that folder is normally set as a volume mount to the host environment. Remember, you are setting the folder you want the database to be restored to inside the Docker container, not the folder location on your local computer.
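The path you type into SSMS must be the path inside the container, so it can help to translate a backup's location on your machine into its container equivalent. The Python sketch below does that, assuming the host folder you pass in is the one your docker-compose file mounts to C:\data (that host folder is hypothetical and varies per project). It also builds a basic T-SQL RESTORE DATABASE ... FROM DISK statement, which you could run from a query window instead of using the restore dialog. Depending on the backup, you may need extra WITH options such as MOVE.

```python
from pathlib import PureWindowsPath


def to_container_path(host_file: str, host_mount: str,
                      container_mount: str = r"C:\data") -> str:
    """Map a file under the host side of a volume mount to the container path.

    host_mount is assumed to be the host folder mounted to container_mount in
    your compose file. Raises ValueError if host_file is outside host_mount,
    i.e. the container cannot see the file at all.
    """
    relative = PureWindowsPath(host_file).relative_to(PureWindowsPath(host_mount))
    return str(PureWindowsPath(container_mount) / relative)


def restore_statement(database: str, backup_path: str) -> str:
    """Build a minimal RESTORE statement, escaping ']' and single quotes."""
    db = database.replace("]", "]]")
    path = backup_path.replace("'", "''")
    return f"RESTORE DATABASE [{db}] FROM DISK = N'{path}'"
```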

Accessing your Solr instance

Again, another straightforward one: open the Solr admin UI at http://localhost:<port>/solr/ in your browser, updating the port number to match your local setup.

Now you can check your indexes and run any queries for debugging purposes.
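You can also query Solr outside the browser. The Python sketch below uses Solr's standard CoreAdmin STATUS call, which lists every core (index), and the /select query handler. The port 8984 and the core name sitecore_master_index in the usage line are assumptions based on Sitecore's Docker examples and default core naming, so substitute the values from your own setup.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def solr_base(port: int, host: str = "localhost", scheme: str = "http") -> str:
    """Base URL of the Solr instance; port is the host port from docker-compose."""
    return f"{scheme}://{host}:{port}/solr"


def core_status_url(port: int, **kwargs) -> str:
    """CoreAdmin STATUS call, which lists every core with its statistics."""
    params = urlencode({"action": "STATUS", "wt": "json"})
    return f"{solr_base(port, **kwargs)}/admin/cores?{params}"


def select_url(port: int, core: str, query: str = "*:*", rows: int = 10, **kwargs) -> str:
    """Standard /select query against a single core."""
    params = urlencode({"q": query, "rows": rows, "wt": "json"})
    return f"{solr_base(port, **kwargs)}/{quote(core)}/select?{params}"


def list_cores(port: int, **kwargs) -> list[str]:
    """Fetch the STATUS response and return the core names (Solr must be running)."""
    with urlopen(core_status_url(port, **kwargs)) as response:
        data = json.load(response)
    return sorted(data["status"])
```

For example, list_cores(8984) returns the names of your indexes, and select_url(8984, "sitecore_master_index") gives a URL you can paste into the browser or fetch with urlopen.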

Remotely connecting to your container IIS instance

If you're a PowerShell whiz, then in theory you can manage the entire IIS instance using the IIS Administration cmdlets. And whilst I occasionally win the PowerShell battle after a lot of Googling and some help from ChatGPT, my everyday usage is limited; limited enough to know that I am far more comfortable with the familiar Internet Information Services (IIS) Manager GUI.

It’s possible to connect to your container instance using the IIS Manager, but this requires 2 steps.

First, download and install IIS Manager for Remote Administration 1.2 on your local machine.

- https://www.microsoft.com/en-us/download/details.aspx?id=41177

- https://www.iis.net/downloads/microsoft/iis-manager

Now you can connect to a remote IIS instance: https://learn.microsoft.com/en-us/iis/manage/remote-administration/configuring-remote-administration-and-feature-delegation-in-iis-7#connect-to-a-site-or-an-application-in-iis-manager

But before we are able to actually connect, we need to enable remote connections within the container instance itself.

- Connect to the CM container using PowerShell:

```powershell
docker exec -it konabos-sxa_cm_1 powershell
```

- Run the commands below:

```powershell
# Install the IIS Management Service (WMSvc)
Add-WindowsFeature web-mgmt-service

# Allow the management service to accept remote connections
New-ItemProperty -Path HKLM:\SOFTWARE\Microsoft\WebManagement\Server -Name EnableRemoteManagement -Value 1 -Force

# Create a local administrator account to log in with from IIS Manager
net user kamruz "Password12345" /add
Add-LocalGroupMember -Group administrators -Member kamruz

# Restart the service so it picks up the registry change
Restart-Service wmsvc
```

Since typing lots of commands is too much work, I keep this code in a PowerShell script file. I copy it to the folder mount (./data/cm/website) and then run the script after connecting to the instance using PowerShell. You could also copy the script across or run these commands as part of your container build scripts, but I don't need this frequently enough to justify that; a one-off copy as above is sufficient.
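If you would rather script this from the host than paste commands into an interactive session, one alternative to the copy-and-run approach is to send the same commands through docker exec. This uses PowerShell's -EncodedCommand parameter, which takes the Base64 of the UTF-16LE script text and so sidesteps quoting problems. The Python sketch below works this way. The container name, user name and password are placeholders to replace with your own.

```python
import base64
import subprocess


def encode_ps(script: str) -> str:
    """Encode a script for powershell.exe -EncodedCommand (Base64 of UTF-16LE)."""
    return base64.b64encode(script.encode("utf-16-le")).decode("ascii")


def enable_remote_iis_script(user: str, password: str) -> str:
    """The same commands as above with the (placeholder) credentials parameterised.

    The password must not contain a double quote.
    """
    return "\n".join([
        "Add-WindowsFeature web-mgmt-service",
        r"New-ItemProperty -Path HKLM:\SOFTWARE\Microsoft\WebManagement\Server"
        r" -Name EnableRemoteManagement -Value 1 -Force",
        f'net user {user} "{password}" /add',
        f"Add-LocalGroupMember -Group administrators -Member {user}",
        "Restart-Service wmsvc",
    ])


def docker_exec_args(container: str, script: str) -> list[str]:
    """Argument list for running a PowerShell script inside a container."""
    return ["docker", "exec", container, "powershell", "-NoProfile",
            "-EncodedCommand", encode_ps(script)]


def enable_remote_iis(container: str, user: str, password: str) -> None:
    """Run the commands; raises CalledProcessError if docker exec exits non-zero."""
    subprocess.run(docker_exec_args(container, enable_remote_iis_script(user, password)),
                   check=True)
```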

We need the IP address of our container instance. Run the following to get that information:

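```powershell
# List the running containers to find the ID of the CM container
docker ps

# Print the container's IP address on each network it is attached to
docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' <container-ID>
```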
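The same information can be read from the full JSON that docker inspect prints, which also tells you which network each address belongs to. The Python sketch below shows this. The sample network name and IP address in the usage line are illustrative only.

```python
import json
import subprocess


def container_ips(inspect_json: str) -> dict[str, str]:
    """Map network name -> IP address from `docker inspect <id>` JSON output."""
    containers = json.loads(inspect_json)  # docker inspect returns a JSON array
    networks = containers[0]["NetworkSettings"]["Networks"]
    return {name: info.get("IPAddress", "") for name, info in networks.items()}


def inspect_container(container: str) -> str:
    """Run `docker inspect` and return its raw JSON output (Docker must be running)."""
    result = subprocess.run(["docker", "inspect", container],
                            capture_output=True, text=True, check=True)
    return result.stdout
```

For example, container_ips(inspect_container("konabos-sxa_cm_1")) returns something like {"nat": "172.x.x.x"}, and that address is what you enter in IIS Manager.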

Now we can connect to our Docker container IIS instance from our local IIS Manager GUI, using the container's IP address and the user account created above.

Have a look around, experiment with the settings and figure out what solves your problem, then work back from that to figure out what PowerShell you need to run in your build scripts.

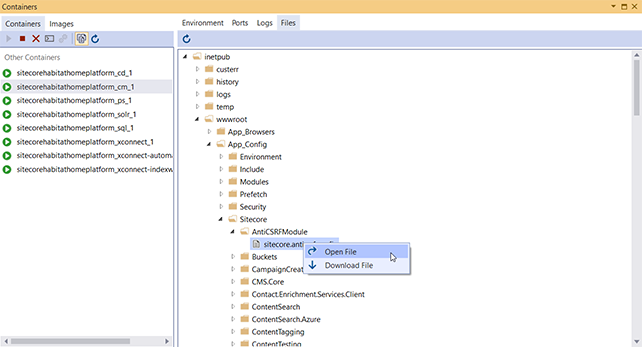

Viewing the content of the File System

Sooner or later you will want to take a look at the filesystem of the container instance. There are a number of options, some of which I shared in my previous article, but let's go through them all.

PowerShell

Connect to your container using PowerShell, and then you can view the filesystem from the command line.

```powershell
docker exec -it konabos-sxa_cm_1 powershell
```

Since the tooling available from the command line is quite limited, I find it useful to copy files across to the mounted drive, located at C:\deploy in the container instance, and then use my local editors to view the files more easily.
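That copy can also be triggered from the host in one step. The Python sketch below builds a docker exec call that runs PowerShell's Copy-Item inside the container. The C:\deploy default comes from this article's setup, and the source path in the usage line (the web.config under C:\inetpub\wwwroot) is only an illustrative example.

```python
import subprocess


def copy_to_deploy_args(container: str, source_path: str,
                        deploy_dir: str = r"C:\deploy") -> list[str]:
    """docker exec arguments that copy a container file/folder into the mounted folder.

    deploy_dir defaults to the mount used in this article; adjust if yours differs.
    """
    # Single quotes inside PowerShell single-quoted strings are escaped by doubling
    src = source_path.replace("'", "''")
    dst = deploy_dir.replace("'", "''")
    command = f"Copy-Item -Path '{src}' -Destination '{dst}' -Recurse -Force"
    return ["docker", "exec", container, "powershell", "-NoProfile", "-Command", command]


def copy_to_deploy(container: str, source_path: str) -> None:
    """Run the copy; raises CalledProcessError if docker exec exits non-zero."""
    subprocess.run(copy_to_deploy_args(container, source_path), check=True)
```

For example, copy_to_deploy("konabos-sxa_cm_1", r"C:\inetpub\wwwroot\web.config") places the file where your local editor can open it through the host side of the mount.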

VS Code

The Docker tooling in VS Code is extremely useful and one of my go-to options. I wrote about this in my previous post, Tooling to help debug your Sitecore Docker Containers.

To get started, install the following extension:

https://marketplace.visualstudio.com/items?itemName=ms-azuretools.vscode-docker

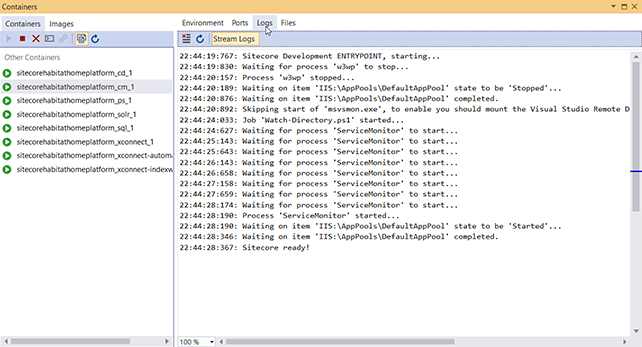

You can view the details of the running containers, browse the filesystem, view the files and also download them to your local machine. You can also inspect the details of the containers, as well as very easily attach to the shell (command line).

Visual Studio

Not to be outdone, its original big brother, Visual Studio, also has some great tooling for Docker, which I also wrote about in my previous post. The tooling is almost the same as the VS Code extension above and lets you do the same kinds of things.

Viewing the content of Init and Asset containers

Sitecore 10.1 introduced Init containers for various initialization tasks, namely for SQL Server and Solr. These containers start up, carry out some tasks, and then promptly shut down. Other images, such as Asset images for modules and Tooling images, are also not meant to be run, but are instead utilised in your own layering and build process.

Since these images are a black box and we do not have any details of what they contain, it can be useful to take a look around every once in a while.

To run these images and prevent them from shutting down immediately, you can run the following Docker commands:

```powershell
docker run -it --entrypoint cmd scr.sitecore.com/sxp/modules/sitecore-spe-assets:6.3-1809

docker run -it --entrypoint powershell scr.sitecore.com/sxp/modules/sitecore-sxa-xm1-assets:10.2-1809
```

Setting --entrypoint to cmd or powershell means the container will continue to run while you keep the terminal session open. Once the container is running, you can look around the filesystem from the command line or use the VS Code/Visual Studio methods listed above.
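If you do this regularly across several asset images, a tiny wrapper saves retyping. The Python sketch below builds the same docker run call. It adds Docker's --rm flag, which is not in the commands above, so the throwaway container is removed when you exit the shell.

```python
import subprocess


def explore_args(image: str, shell: str = "powershell") -> list[str]:
    """docker run arguments that replace the image's entrypoint with an interactive shell.

    --rm (an addition to the original commands) deletes the container on exit.
    """
    if shell not in ("cmd", "powershell"):
        raise ValueError("shell must be 'cmd' or 'powershell'")
    return ["docker", "run", "-it", "--rm", "--entrypoint", shell, image]


def explore(image: str, shell: str = "powershell") -> int:
    """Open the shell; the child process inherits this console, so it is interactive."""
    return subprocess.run(explore_args(image, shell)).returncode
```

For example, explore("scr.sitecore.com/sxp/modules/sitecore-spe-assets:6.3-1809", "cmd") drops you into a cmd prompt inside the SPE assets image.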

The use of Docker and containers with Sitecore provides a very powerful and flexible environment for development, but mastering the new ways of working can seem daunting. Hopefully you found these tips and insights into the tooling useful, and can see that it is not all that different from what you have been doing all along on your local machine anyway.

Kamruz Jaman

Kamruz is a 14-time Sitecore MVP who has worked with the Sitecore platform for more than a decade and has over 20 years of development and architecture experience using the Microsoft technology stack. Kamruz is heavily involved in the Sitecore Community and has spoken at various User Groups and Conferences. As one of the managing partners of Konabos Inc, Kamruz works closely with clients to ensure projects are successfully delivered to a high standard.
